🚀 Demystifying Compute-in-Memory: The Next Big Leap in AI Hardware

Introduction

As artificial intelligence (AI) continues to redefine the boundaries of what's possible, the demand for faster, more efficient computation is skyrocketing. But traditional computing architectures, such as the von Neumann and Harvard architectures, are struggling to keep up, especially when handling the massive volumes of data involved in machine learning and deep learning workloads.

Enter Compute-in-Memory (CIM): a groundbreaking architectural shift that is turning heads in the world of hardware design and AI acceleration. Instead of constantly shuttling data between memory and processor, CIM proposes a bold solution: why not compute right where the data is stored?

In this blog, we'll unpack the what, why, and how of Compute-in-Memory, exploring its advantages, challenges, and potential to power the future of intelligent systems.

What is Compute-in-Memory?

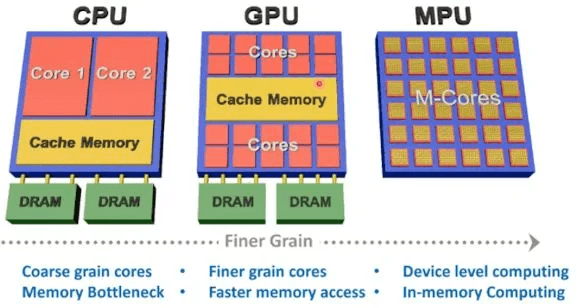

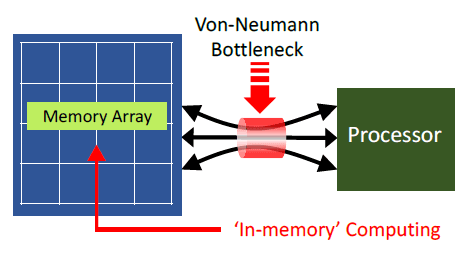

Traditionally, computers follow the von Neumann architecture, where data and instructions are stored in memory and must travel to a separate processing unit (CPU or GPU) to be processed. This constant data shuttling creates a major bottleneck, often called the von Neumann bottleneck or the memory wall, which is especially severe in data-intensive tasks like neural network inference and training.

Compute-in-Memory (CIM) breaks this norm by performing computations directly inside or near the memory arrays, particularly for matrix-vector multiplications (MVM) — the backbone of most deep learning operations.

This is typically achieved with both mature and emerging memory technologies, including:

SRAM (Static RAM)

RRAM (Resistive RAM)

PCM (Phase Change Memory)

MRAM (Magnetoresistive RAM)

In CIM, these memory arrays double as computational elements, drastically reducing the need to move data between memory and processors.

Why is CIM Needed?

1. Breaking the Memory Bottleneck

Deep neural networks (DNNs) require millions to billions of multiply-accumulate (MAC) operations per inference. In von Neumann systems, fetching the operands for each MAC from memory is power-hungry and time-consuming.
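
To get a feel for these numbers, here is a small sketch that counts MACs for two common layer types. The layer shapes are hypothetical, chosen only for illustration. Note that in a fully connected layer processing a single input vector, each weight is used in exactly one MAC, so a von Neumann machine has to fetch one weight from memory for every MAC it performs.

```python
def dense_macs(in_features: int, out_features: int) -> int:
    """MACs for a fully connected layer y = W @ x, W of shape (out_features, in_features)."""
    return in_features * out_features


def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a k x k convolution producing an h_out x w_out x c_out output."""
    # Each output element needs c_in * k * k multiply-accumulates.
    return h_out * w_out * c_out * c_in * k * k


# Hypothetical layer shapes, for illustration only.
print(dense_macs(4096, 4096))          # 16777216
print(conv2d_macs(56, 56, 64, 64, 3))  # 115605504
```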

2. Energy Efficiency

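A large share of the energy in AI workloads is spent not on computation but on data movement: reading an operand from off-chip DRAM can cost orders of magnitude more energy than the arithmetic performed on it. CIM cuts this overhead by keeping computation inside the memory fabric.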
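
The back-of-the-envelope sketch below shows how data movement can dominate. The per-operation energies are hypothetical placeholders, not measurements, picked only to reflect the idea that an off-chip read costs far more than a MAC; real figures depend on the process node, memory size, and data width. The model also counts only weight reads and ignores activations and on-chip caching.

```python
# Hypothetical per-operation energies in picojoules. These are placeholders
# chosen only to illustrate the ratio, not measured values.
E_MAC_PJ = 1.0          # one multiply-accumulate in the datapath
E_DRAM_READ_PJ = 100.0  # reading one operand from off-chip DRAM


def energy_split_pj(macs: int, dram_reads: int) -> tuple[float, float]:
    """Return (compute energy, data-movement energy) in picojoules."""
    return macs * E_MAC_PJ, dram_reads * E_DRAM_READ_PJ


# Batch-1 fully connected layer: every weight is read once and used in one MAC.
# Only weight reads are counted; activations and caches are ignored.
macs = 4096 * 4096
compute, movement = energy_split_pj(macs, dram_reads=macs)
share = movement / (compute + movement)
print(f"data movement share: {share:.1%}")  # data movement share: 99.0%
```

Under these assumptions almost all of the energy goes into fetching weights, which is exactly the cost CIM targets by leaving the weights where they are stored.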

3. Higher Throughput

Because many rows and columns of a memory array can take part in a computation at the same time, a single array evaluates many MACs in parallel, enabling high throughput, especially for inference workloads.
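
A rough way to see this parallelism is to count steps for one matrix-vector product. The sketch below assumes a hypothetical 256×256 array that evaluates a full tile of MACs per activation, and it ignores real-world overheads such as ADC conversion time and bit-serial input streaming, so it illustrates the idea rather than estimating performance.

```python
import math


def sequential_steps(in_features: int, out_features: int) -> int:
    """Steps for a single MAC unit: one multiply-accumulate per step."""
    return in_features * out_features


def crossbar_steps(in_features: int, out_features: int,
                   rows: int = 256, cols: int = 256) -> int:
    """Activations of one hypothetical rows x cols array for y = W @ x.

    Inputs drive the rows and outputs are read on the columns, so each
    activation evaluates up to rows * cols MACs at once.
    """
    return math.ceil(in_features / rows) * math.ceil(out_features / cols)


print(sequential_steps(4096, 4096))  # 16777216
print(crossbar_steps(4096, 4096))    # 256
```

Spreading the tiles over several arrays that run concurrently cuts the step count further, at the cost of more silicon.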

4. Better Area Efficiency

For specific tasks like MVM, analog CIM solutions (e.g., using RRAM crossbars) can pack more operations into a smaller area than digital logic.

How Does CIM Work?

There are two primary flavors of Compute-in-Memory:

🔹 Analog CIM

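Uses Ohm’s law (I = GV) and Kirchhoff’s current law to perform vector-matrix multiplications inside crossbar arrays: each cell’s conductance G_ij stores a weight, the inputs are applied as row voltages V_i, and the currents flowing into each column add up to I_j = Σ_i G_ij·V_i.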

Most common with RRAM and PCM.

Pros: Very energy- and area-efficient.

Cons: Limited precision, affected by noise, variability, and non-idealities.
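
Here is a minimal, idealized simulation of analog MVM in a crossbar. It assumes a common approach for signed weights (storing each weight as the difference of two non-negative conductances, G_pos and G_neg), a hypothetical maximum conductance of 100 µS, and a hypothetical read voltage of 0.2 V. It also encodes signed inputs directly as positive or negative voltages, which is a simplification. Noise, wire resistance, and ADC quantization are deliberately left out.

```python
G_MAX = 100e-6  # hypothetical maximum cell conductance: 100 microsiemens
V_READ = 0.2    # hypothetical read voltage for an input of 1.0, in volts


def to_conductances(W):
    """Map signed weights onto two non-negative conductance arrays (G_pos - G_neg)."""
    w_max = max(abs(w) for row in W for w in row)
    scale = G_MAX / w_max  # siemens per unit of weight
    g_pos = [[max(w, 0.0) * scale for w in row] for row in W]
    g_neg = [[max(-w, 0.0) * scale for w in row] for row in W]
    return g_pos, g_neg, scale


def column_currents(G, v):
    """Ideal crossbar: I_j = sum_i G[i][j] * V[i] (Ohm's law per cell, KCL per column)."""
    return [sum(G[i][j] * v[i] for i in range(len(v))) for j in range(len(G[0]))]


def analog_mvm(W, x):
    """y_j = sum_i W[i][j] * x[i], computed through an idealized crossbar.

    W has one row per input line and one column per output line.
    """
    g_pos, g_neg, scale = to_conductances(W)
    v = [xi * V_READ for xi in x]  # signed inputs encoded directly as voltages
    i_pos = column_currents(g_pos, v)
    i_neg = column_currents(g_neg, v)
    # Subtract the differential currents and convert back to weight units.
    return [(ip - ineg) / (scale * V_READ) for ip, ineg in zip(i_pos, i_neg)]


W = [[0.5, -1.0],
     [2.0, 0.25],
     [-0.75, 1.5]]  # 3 input rows x 2 output columns
x = [1.0, 0.5, -1.0]
print(analog_mvm(W, x))  # approximately [2.25, -2.375]
```

The whole product comes out of one "read" of the array: every cell multiplies in place, and the wires do the summing.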

🔹 Digital CIM

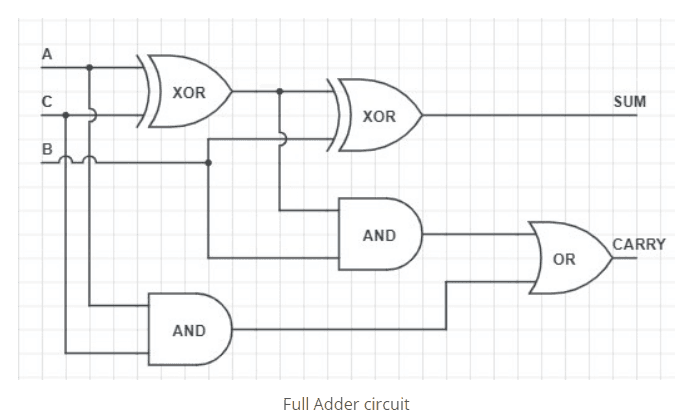

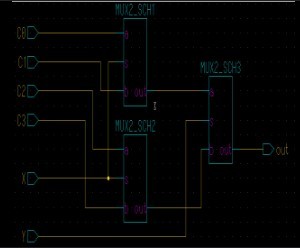

Performs Boolean or binary arithmetic inside digital SRAM arrays using modified bitcells or peripheral circuitry.

Often easier to integrate with digital design flows.

Pros: Higher precision, better reliability.

Cons: Typically higher energy and area per operation than analog CIM.
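
To illustrate one common digital CIM style, the sketch below computes an unsigned dot product bit-serially. Inputs are streamed one bit at a time, each stored weight bit is ANDed with the input bit (the operation a modified bitcell might perform), an adder tree counts the ones in each column, and shift-and-add logic applies the binary significance of each partial count. The function name and bit widths are hypothetical, and real macros differ in how they handle signed values and pipelining.

```python
def digital_cim_dot(weights, inputs, w_bits=4, x_bits=4):
    """Unsigned dot product computed bit-serially, in the style of a digital SRAM CIM macro."""
    if any(not 0 <= w < 2 ** w_bits for w in weights):
        raise ValueError("weights must be unsigned w_bits-bit integers")
    if any(not 0 <= x < 2 ** x_bits for x in inputs):
        raise ValueError("inputs must be unsigned x_bits-bit integers")
    acc = 0
    for xb in range(x_bits):          # one input bit per cycle
        for wb in range(w_bits):      # one column per stored weight bit
            # In-bitcell AND of input bit and weight bit, then an adder tree
            # counts how many rows produced a 1.
            ones = sum(((x >> xb) & 1) & ((w >> wb) & 1)
                       for w, x in zip(weights, inputs))
            acc += ones << (xb + wb)  # shift-and-add by bit significance
    return acc


weights = [3, 7, 0, 12]
inputs = [5, 1, 9, 2]
print(digital_cim_dot(weights, inputs))  # 46
```

Because every step is an exact Boolean or integer operation, the result matches an ordinary dot product bit for bit, which is where digital CIM gets its precision and reliability.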

Challenges and Limitations

Despite its potential, CIM isn’t without hurdles:

1. Precision Limitations

Analog CIM is often limited to low precision (e.g., 4–6 bits), which may not suffice for all tasks.
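
The effect of limited precision can be sketched by quantizing the weights of a random matrix to a given number of bits and comparing the resulting MVM against full precision. This models weight quantization only, assuming symmetric uniform levels; in a real analog array, the number of distinguishable conductance levels and the ADC resolution both contribute.

```python
import random


def quantize(w, bits, w_max):
    """Symmetric uniform quantization of w to signed `bits`-bit levels."""
    if bits < 2:
        raise ValueError("bits must be at least 2")
    levels = 2 ** (bits - 1) - 1  # e.g. 7 positive levels for 4 bits
    step = w_max / levels
    q = max(-levels, min(levels, round(w / step)))
    return q * step


def relative_mvm_error(bits, n_in=256, n_out=64, seed=0):
    """Relative L2 error of y = W x when W is quantized to `bits` bits."""
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
    x = [rng.gauss(0.0, 1.0) for _ in range(n_in)]
    w_max = max(abs(w) for row in W for w in row)
    Wq = [[quantize(w, bits, w_max) for w in row] for row in W]
    exact = [sum(W[i][j] * x[i] for i in range(n_in)) for j in range(n_out)]
    approx = [sum(Wq[i][j] * x[i] for i in range(n_in)) for j in range(n_out)]
    err = sum((a - e) ** 2 for a, e in zip(approx, exact)) ** 0.5
    ref = sum(e ** 2 for e in exact) ** 0.5
    return err / ref


for bits in (2, 4, 6, 8):
    print(f"{bits}-bit weights: relative error {relative_mvm_error(bits):.4f}")
```

The error shrinks as bits are added, which is why low-precision analog arrays are usually paired with models trained or fine-tuned to tolerate quantization.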

2. Non-Idealities

Noise, device-to-device variability, IR drop along the wires, and parasitics all degrade the accuracy of analog crossbars.
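
As a simple illustration of device variability, the sketch below perturbs every stored weight by a random relative error, w·(1 + σ·z) with z drawn from a standard normal distribution, and measures how far the MVM result drifts. This multiplicative Gaussian model is an assumption made for simplicity; IR drop and parasitics are not modeled.

```python
import random


def variability_error(sigma, n_in=128, n_out=32, seed=1):
    """Relative L2 error of y = W x when each stored weight becomes w * (1 + sigma * z)."""
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
    x = [rng.gauss(0.0, 1.0) for _ in range(n_in)]
    # Hypothetical device model: independent multiplicative Gaussian error per cell.
    W_dev = [[w * (1.0 + sigma * rng.gauss(0.0, 1.0)) for w in row] for row in W]
    exact = [sum(W[i][j] * x[i] for i in range(n_in)) for j in range(n_out)]
    noisy = [sum(W_dev[i][j] * x[i] for i in range(n_in)) for j in range(n_out)]
    err = sum((a - e) ** 2 for a, e in zip(noisy, exact)) ** 0.5
    ref = sum(e ** 2 for e in exact) ** 0.5
    return err / ref


for sigma in (0.01, 0.05, 0.10):
    print(f"sigma={sigma:.2f}: relative error {variability_error(sigma):.4f}")
```

Because the same random draws are reused for every σ, the error here grows in proportion to σ, so halving device variability halves the output error under this model.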

3. Scalability

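Integrating CIM into full-system architectures (e.g., alongside control logic and memory hierarchies) is still an active area of research. Large layers also have to be split across many fixed-size arrays, and the partial sums from those arrays must be combined outside the memory.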
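
Scaling up involves tiling: a weight matrix larger than one array is split into array-sized blocks, and the partial sums from blocks that feed the same output columns have to be added together outside the arrays. The sketch below checks that this tiling reproduces the untiled result; the tiny 4×4 tile size and the helper names are hypothetical choices to keep the example readable.

```python
def mvm(W, x):
    """y_j = sum_i W[i][j] * x[i] for W with one row per input and one column per output."""
    return [sum(W[i][j] * x[i] for i in range(len(x))) for j in range(len(W[0]))]


def tiled_mvm(W, x, rows=4, cols=4):
    """Same product, computed as if W were spread over rows x cols arrays."""
    n_in, n_out = len(W), len(W[0])
    y = [0] * n_out
    for r0 in range(0, n_in, rows):        # tiles along the input dimension
        for c0 in range(0, n_out, cols):   # tiles along the output dimension
            tile = [row[c0:c0 + cols] for row in W[r0:r0 + rows]]
            partial = mvm(tile, x[r0:r0 + rows])  # one array's column outputs
            for j, p in enumerate(partial):
                y[c0 + j] += p  # partial sums merged outside the arrays
    return y


W = [[(3 * i + 2 * j) % 5 - 2 for j in range(7)] for i in range(10)]  # 10 x 7
x = [i % 4 - 1 for i in range(10)]
print(tiled_mvm(W, x) == mvm(W, x))  # True
```

With a 10×7 matrix and 4×4 tiles, the mapping uses ceil(10/4) × ceil(7/4) = 6 arrays, and the accumulation loop stands in for the extra digital logic and buffering a real system needs.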

4. Lack of Standard Tools

Unlike CPU or GPU development, CIM doesn’t yet have mature compilers, toolchains, or simulators (although frameworks like NeuroSim and CIMFlow are emerging).

Applications of CIM

AI Inference Engines: Edge devices like smart cameras, wearables, and autonomous drones can benefit from CIM’s efficiency.

IoT Devices: Low-power CIM cores can run small models on sensor data in real-time.

Neuromorphic Computing: CIM is often used in brain-inspired architectures.

Security Hardware: On-chip CIM can provide fast encryption and pattern matching.

Conclusion

Compute-in-Memory represents a paradigm shift in how we think about processing, pushing the boundaries of efficiency by embracing in-situ computation. While challenges remain, its potential to revolutionize AI workloads is undeniable.

If you're passionate about computer architecture, memory design, or AI acceleration, now is the perfect time to explore this exciting frontier.